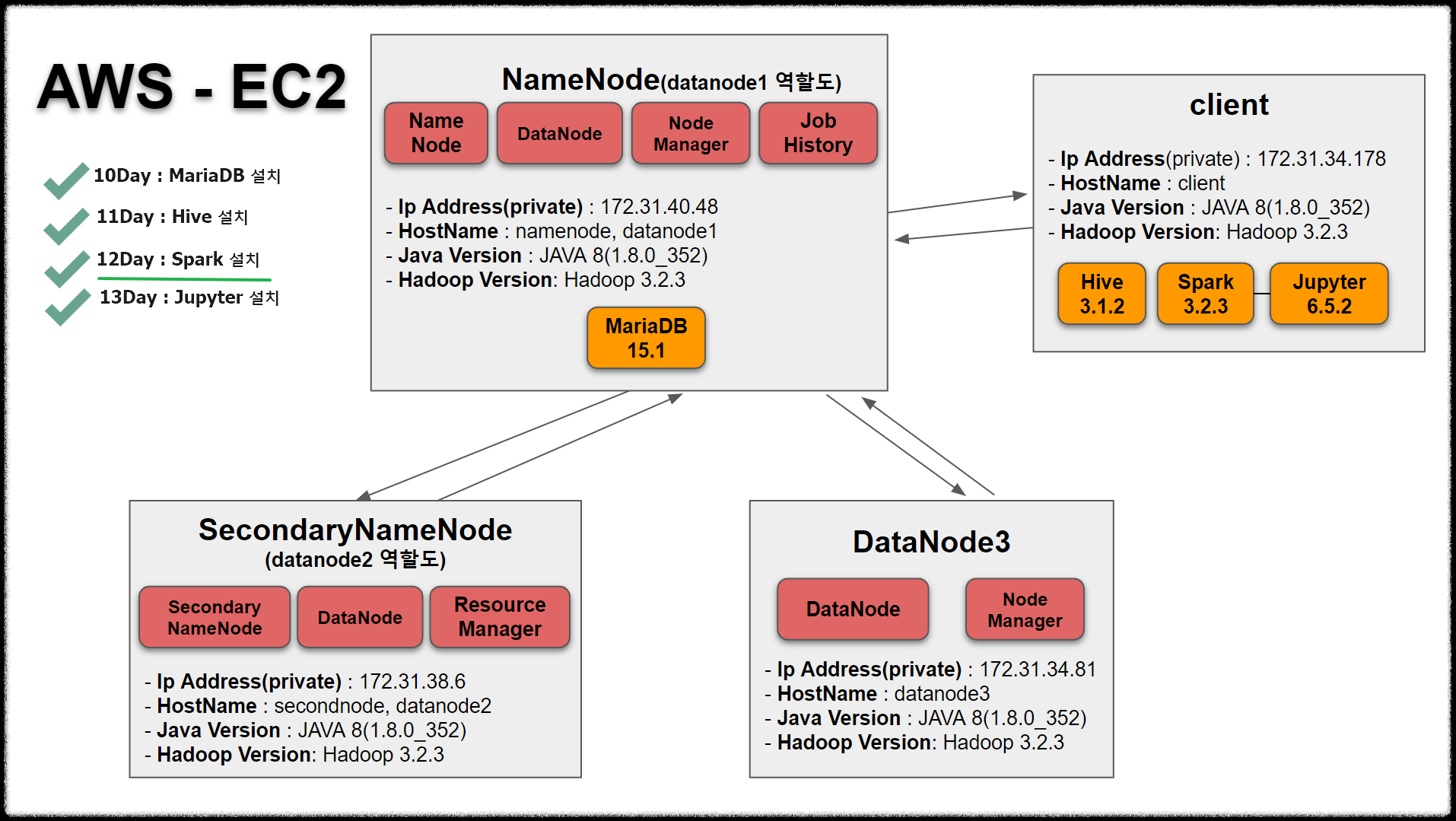

| SystemStructure

Today, on day 12, we will install Spark.

Table of contents

1. Installing Spark

1.3 Creating and editing the Spark conf files

2. Running pyspark

3. Spark errors

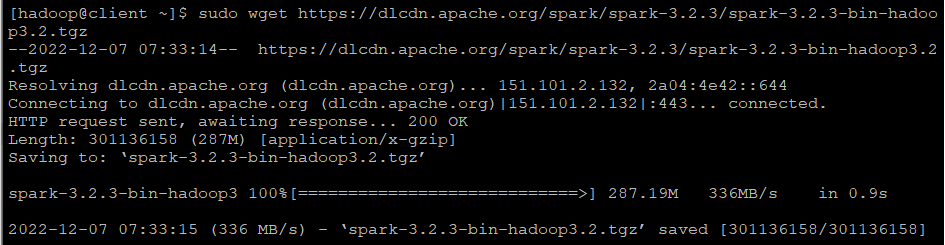

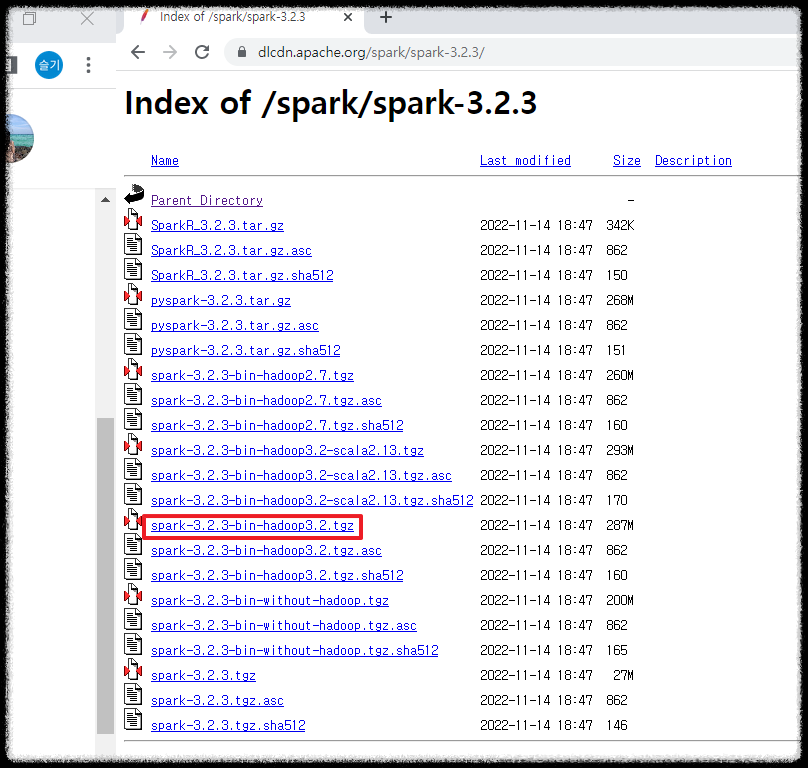

1. Installing Spark

[hadoop@client ~]$ sudo wget https://dlcdn.apache.org/spark/spark-3.2.3/spark-3.2.3-bin-hadoop3.2.tgz

1.1 Extracting the archive

[hadoop@client ~]$ tar xvf spark-3.2.3-bin-hadoop3.2.tgz

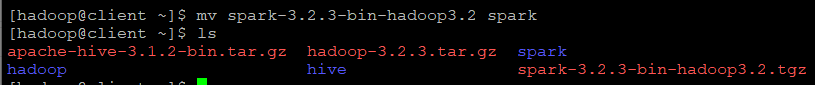

1.2 Renaming the directory

[hadoop@client ~]$ mv spark-3.2.3-bin-hadoop3.2 spark

[hadoop@client ~]$ ls

1.3 Creating and editing the Spark conf files

Create the slaves, spark-defaults.conf, and spark-env.sh files.

1.3.1 slaves

[hadoop@client spark]$ cd /home/hadoop/spark/conf

[hadoop@client conf]$ vi slaves

datanode1

datanode2

datanode3
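A stray blank line or typo in slaves tends to surface only later, when a daemon fails to start on one host. The sketch below assumes one hostname per line with optional `#` comments; `list_worker_hosts` is a hypothetical helper name, not something Spark ships.

```shell
# Hypothetical helper: print the hostnames in a slaves-style file,
# dropping '#' comments, surrounding whitespace, and blank lines.
list_worker_hosts() {
  sed -e 's/#.*$//' \
      -e 's/^[[:space:]]*//' \
      -e 's/[[:space:]]*$//' \
      -e '/^$/d' "$1"
}

# Example: report hosts that do not resolve from this machine.
# list_worker_hosts /home/hadoop/spark/conf/slaves | while read -r h; do
#   getent hosts "$h" >/dev/null || echo "cannot resolve: $h"
# done
```

Keeping the parsing in a small function makes it easy to reuse the same host list for other checks, such as an ssh connectivity test.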

1.3.2 spark-defaults.conf

[hadoop@client conf]$ cp spark-defaults.conf.template spark-defaults.conf

No further changes are needed.

1.3.3 spark-env.sh

[hadoop@client conf]$ cp spark-env.sh.template spark-env.sh

[hadoop@client conf]$ vi spark-env.sh

Add the following at the bottom of the file:

export HADOOP_CONF_DIR=/home/hadoop/hadoop/etc/hadoop

export YARN_CONF_DIR=/home/hadoop/hadoop/etc/hadoop

export SPARK_DRIVER_MEMORY=1024m

export SPARK_EXECUTOR_INSTANCES=1

export SPARK_EXECUTOR_CORES=1

export SPARK_EXECUTOR_MEMORY=1024m

export SPARK_WORKER_DIR=/data/spwork

export SPARK_PID_DIR=/data/sptmp
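Several of these variables point at directories (the Hadoop conf directory, /data/spwork, /data/sptmp). If one of them is missing or not usable by the hadoop user, the problem may only show up when a daemon or job starts. As a rough sketch, the hypothetical helper `check_env_dirs` below scans a spark-env.sh style file for `export ..._DIR=` lines and reports which paths exist. It assumes plain `export NAME=value` lines without quoting or variable expansion.

```shell
# Hypothetical helper: for every "export NAME_DIR=/path" line in the given
# file, report whether /path is an existing directory.
# Returns non-zero if any directory is missing.
check_env_dirs() {
  local rc=0 name path vars
  vars=$(sed -n 's/^export \([A-Z_]*_DIR\)=\(.*\)$/\1=\2/p' "$1")
  while IFS='=' read -r name path; do
    [ -n "$name" ] || continue        # skip the empty line when nothing matched
    if [ -d "$path" ]; then
      echo "ok      $name=$path"
    else
      echo "missing $name=$path"
      rc=1
    fi
  done <<EOF
$vars
EOF
  return "$rc"
}

# Example:
# check_env_dirs /home/hadoop/spark/conf/spark-env.sh
```

The check only tests existence; whether the hadoop user can write to /data/spwork and /data/sptmp would still need to be confirmed separately.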

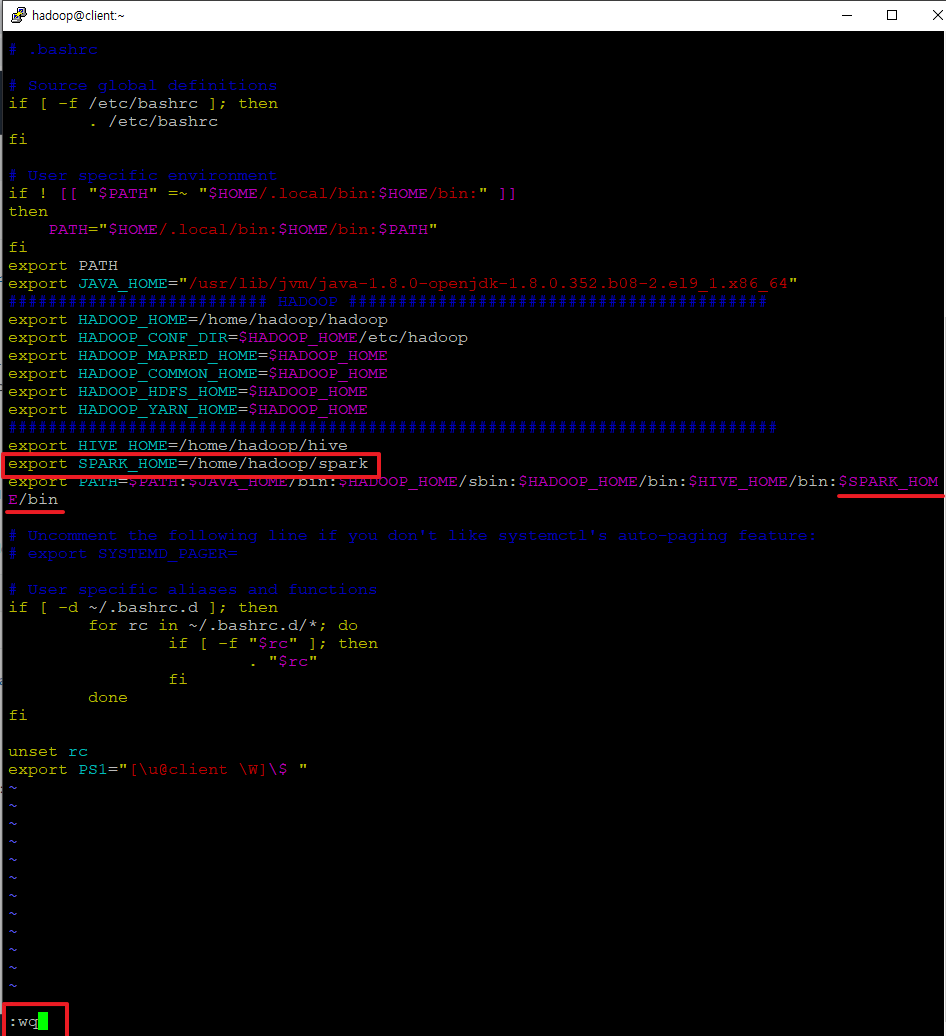

1.4 Environment settings

[hadoop@client ~]$ vi ~/.bashrc

export SPARK_HOME=/home/hadoop/spark

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$SPARK_HOME/bin

Esc -> :wq

[hadoop@client ~]$ source ~/.bashrc

[hadoop@client ~]$ echo $SPARK_HOME
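Printing `$SPARK_HOME` confirms the variable is set, but not that it points at a working install or that `$SPARK_HOME/bin` made it onto `PATH`. The following is a minimal sketch of a fuller check; `check_spark_home` is a hypothetical helper name.

```shell
# Hypothetical helper: confirm SPARK_HOME points at a Spark install and that
# its bin directory is on PATH. Prints the first problem found.
check_spark_home() {
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set"
    return 1
  fi
  if [ ! -x "$SPARK_HOME/bin/pyspark" ]; then
    echo "no executable pyspark under $SPARK_HOME/bin"
    return 1
  fi
  # Wrap PATH in colons so the match also works for the first and last entry.
  case ":$PATH:" in
    *":$SPARK_HOME/bin:"*) echo "ok: $SPARK_HOME" ;;
    *) echo "$SPARK_HOME/bin is not on PATH"; return 1 ;;
  esac
}
```

Running `check_spark_home` right after `source ~/.bashrc` gives a one-line answer before moving on to starting pyspark.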

2. Running pyspark

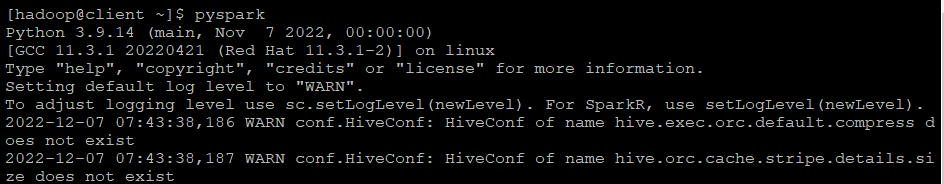

[hadoop@client ~]$ pyspark

Python 3.9.14 (main, Nov 7 2022, 00:00:00)

[GCC 11.3.1 20220421 (Red Hat 11.3.1-2)] on linux

Type "help", "copyright", "credits" or "license" for more information.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

2022-12-07 07:43:38,186 WARN conf.HiveConf: HiveConf of name hive.exec.orc.default.compress does not exist

(output omitted) ...

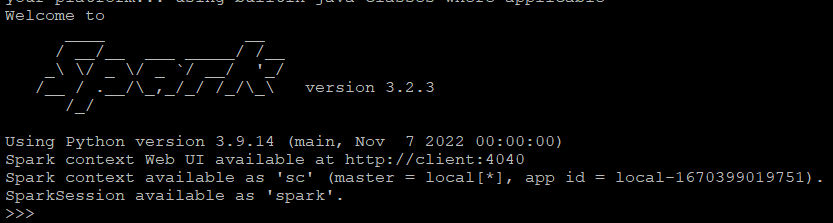

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.2.3
      /_/

Using Python version 3.9.14 (main, Nov 7 2022 00:00:00)

Spark context Web UI available at http://client:4040

Spark context available as 'sc' (master = local[*], app id = local-1670399019751).

SparkSession available as 'spark'.

>>>
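The startup output reports `master = local[*]`, so this session runs on the client machine only and does not use the YARN cluster. To run against YARN, the master can be passed on the command line; Spark then locates the cluster through `HADOOP_CONF_DIR` / `YARN_CONF_DIR`. The wrapper below is only a sketch: `pyspark_on_yarn` and the `DRY_RUN` variable are hypothetical names, while `--master yarn` and `--deploy-mode client` are standard launcher options.

```shell
# Hypothetical wrapper: start PySpark on YARN instead of local[*].
# With DRY_RUN=1 it only prints the command line, so it can be reviewed first.
pyspark_on_yarn() {
  # Prepend the launcher and YARN options to any extra arguments.
  set -- pyspark --master yarn --deploy-mode client "$@"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Example:
# DRY_RUN=1 pyspark_on_yarn --num-executors 1
```

If the session starts on YARN, the banner line should show `master = yarn` rather than `local[*]`.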

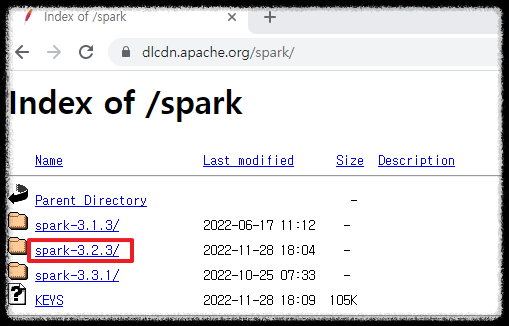

3. Spark errors

[hadoop@Master]$ sudo wget https://dlcdn.apache.org/spark/spark-3.2.2/spark-3.2.2-bin-hadoop3.2.tgz

[sudo] password for hadoop:

--2022-11-30 06:52:48-- https://dlcdn.apache.org/spark/spark-3.2.2/spark-3.2.2-bin-hadoop3.2.tgz

Resolving dlcdn.apache.org (dlcdn.apache.org)... 151.101.2.132, 2a04:4e42::644

Connecting to dlcdn.apache.org (dlcdn.apache.org)|151.101.2.132|:443... connected.

HTTP request sent, awaiting response... 404 Not Found

2022-11-30 06:52:48 ERROR 404: Not Found.

After hitting the error above, I visited the site directly and found that 3.2.2 had already been taken down and replaced by 3.2.3.
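This kind of 404 is likely to recur, because dlcdn.apache.org hosts only current releases while superseded ones remain available on archive.apache.org. A minimal sketch of a download that falls back to the archive is shown below; `spark_tarball_urls` and `fetch_spark` are hypothetical helper names, and the sketch assumes `wget` is installed.

```shell
# Hypothetical helper: print candidate download URLs for a Spark release,
# current mirror first, then the Apache archive.
spark_tarball_urls() {
  version=$1
  hadoop_profile=$2   # e.g. 3.2 for the "bin-hadoop3.2" builds
  file="spark-${version}-bin-hadoop${hadoop_profile}.tgz"
  echo "https://dlcdn.apache.org/spark/spark-${version}/${file}"
  echo "https://archive.apache.org/dist/spark/spark-${version}/${file}"
}

# Hypothetical helper: download from the first URL that responds.
fetch_spark() {
  for url in $(spark_tarball_urls "$1" "$2"); do
    # --spider checks that the file exists without downloading it.
    if wget -q --spider "$url"; then
      wget "$url"
      return
    fi
  done
  echo "no mirror has Spark $1" >&2
  return 1
}

# Example:
# fetch_spark 3.2.2 3.2
```

Separating URL construction from the download keeps the network access in one place and makes the URL list easy to inspect on its own.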
